Project development prototype

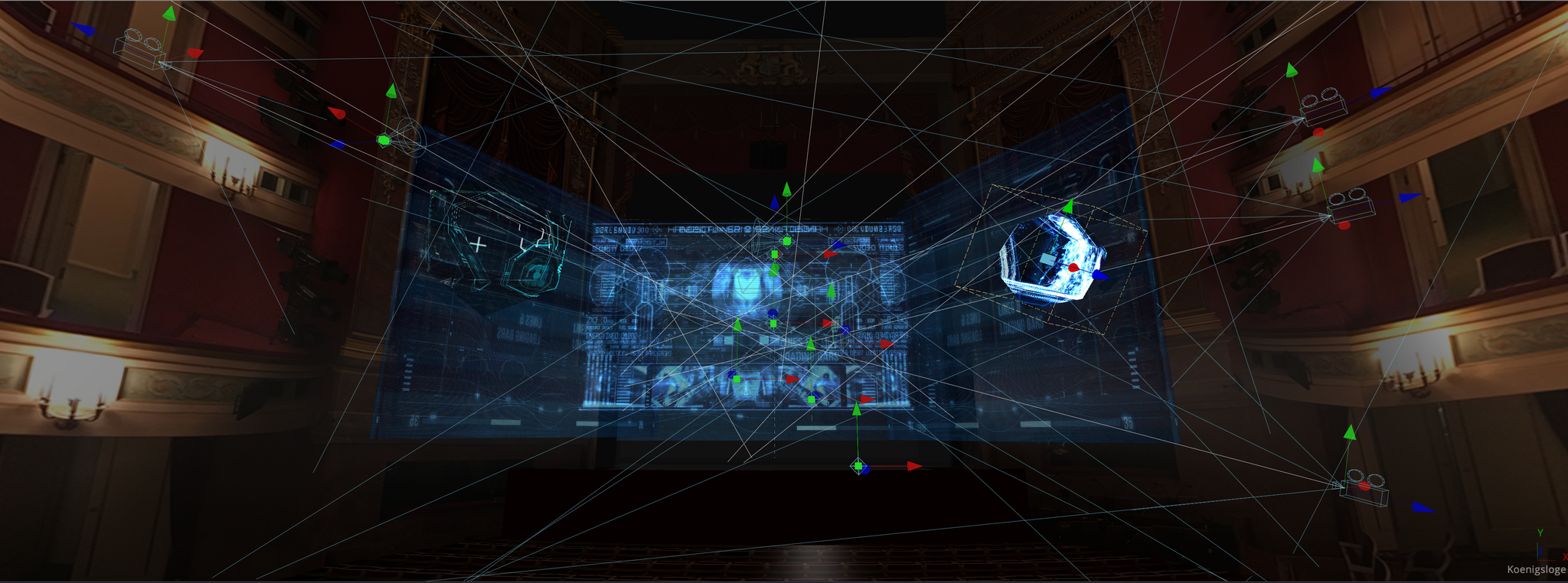

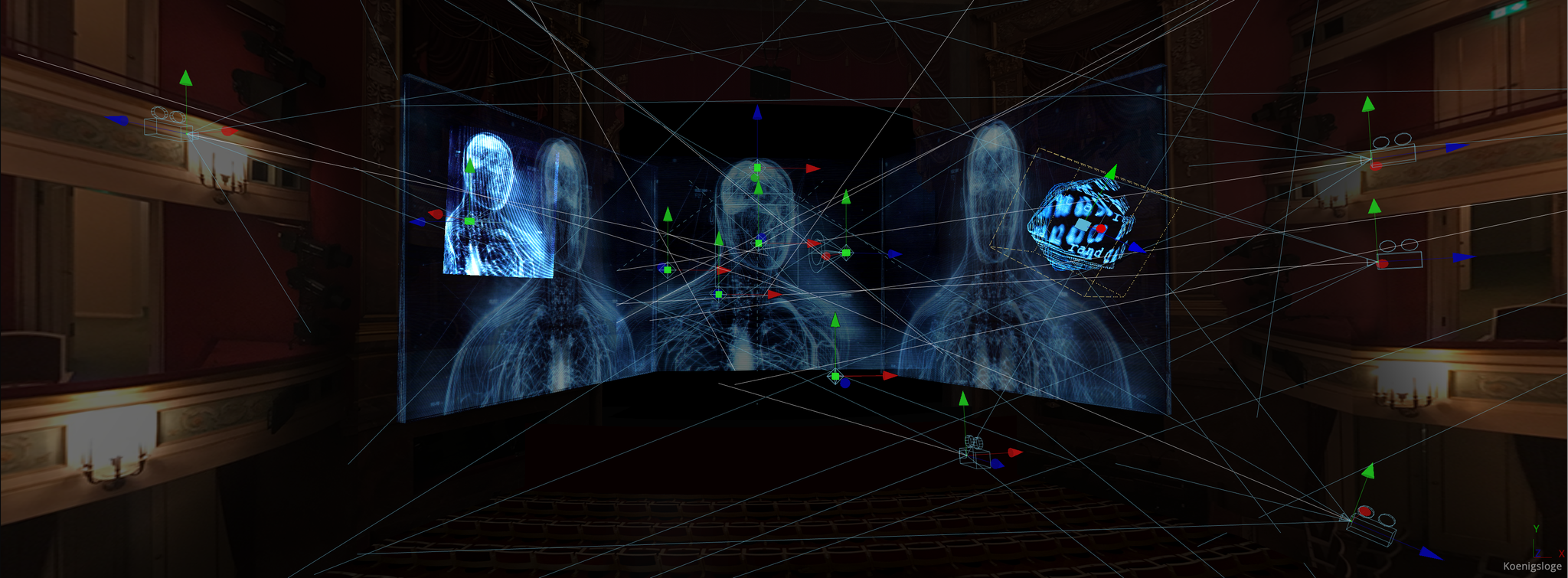

Mensch Maschine Cyborg Dancing (Unreal Engine goes to Theater, The World in 3D)

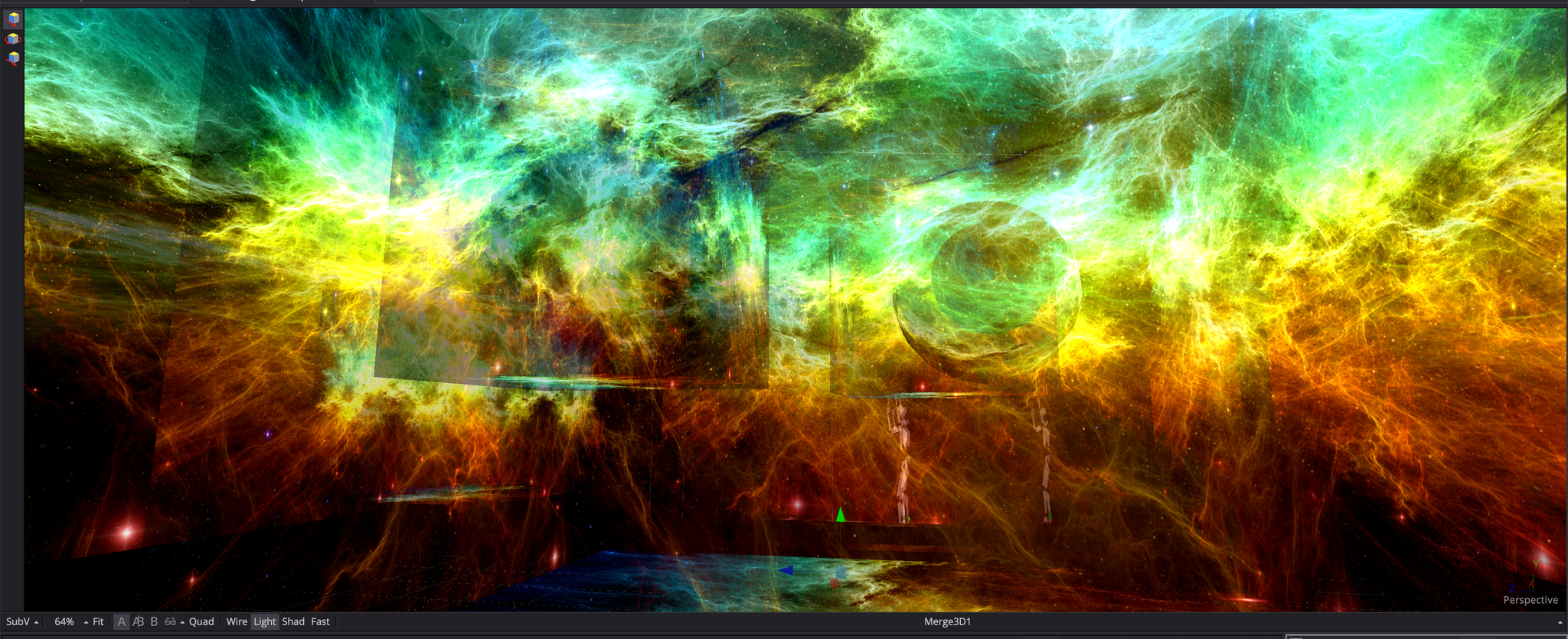

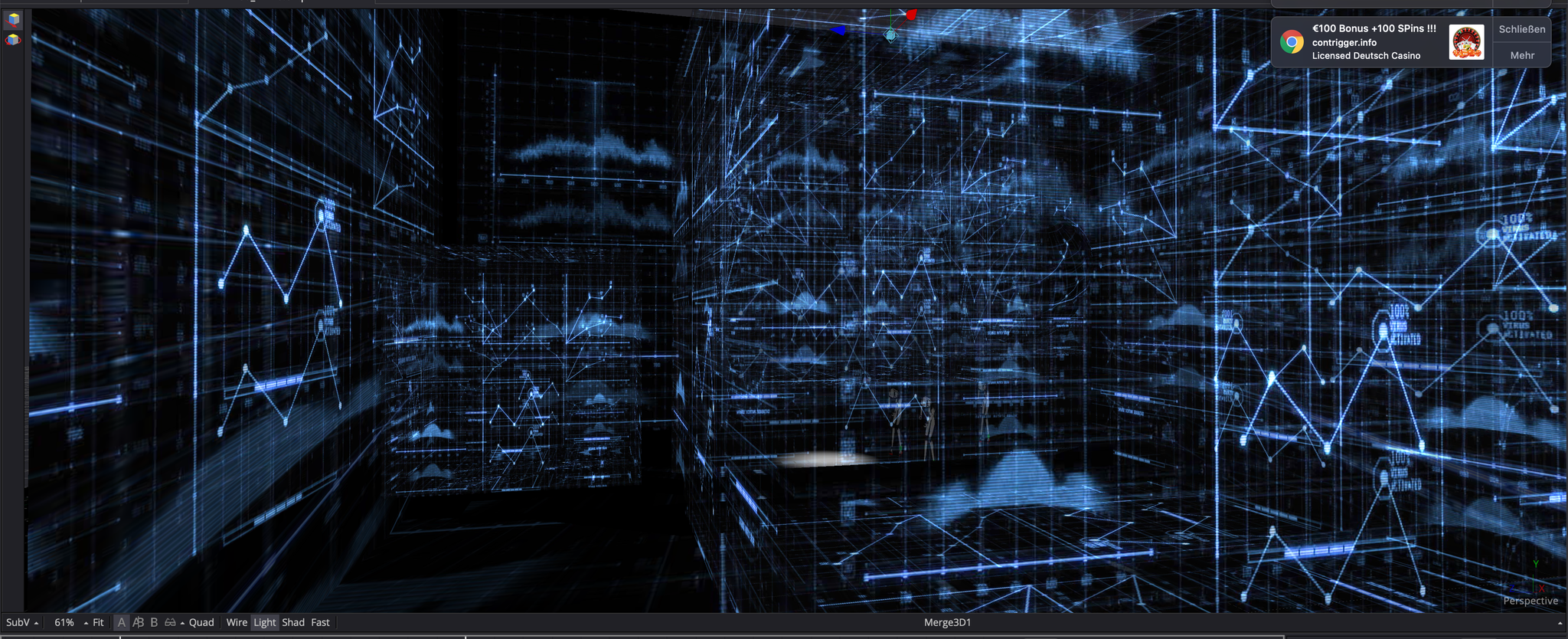

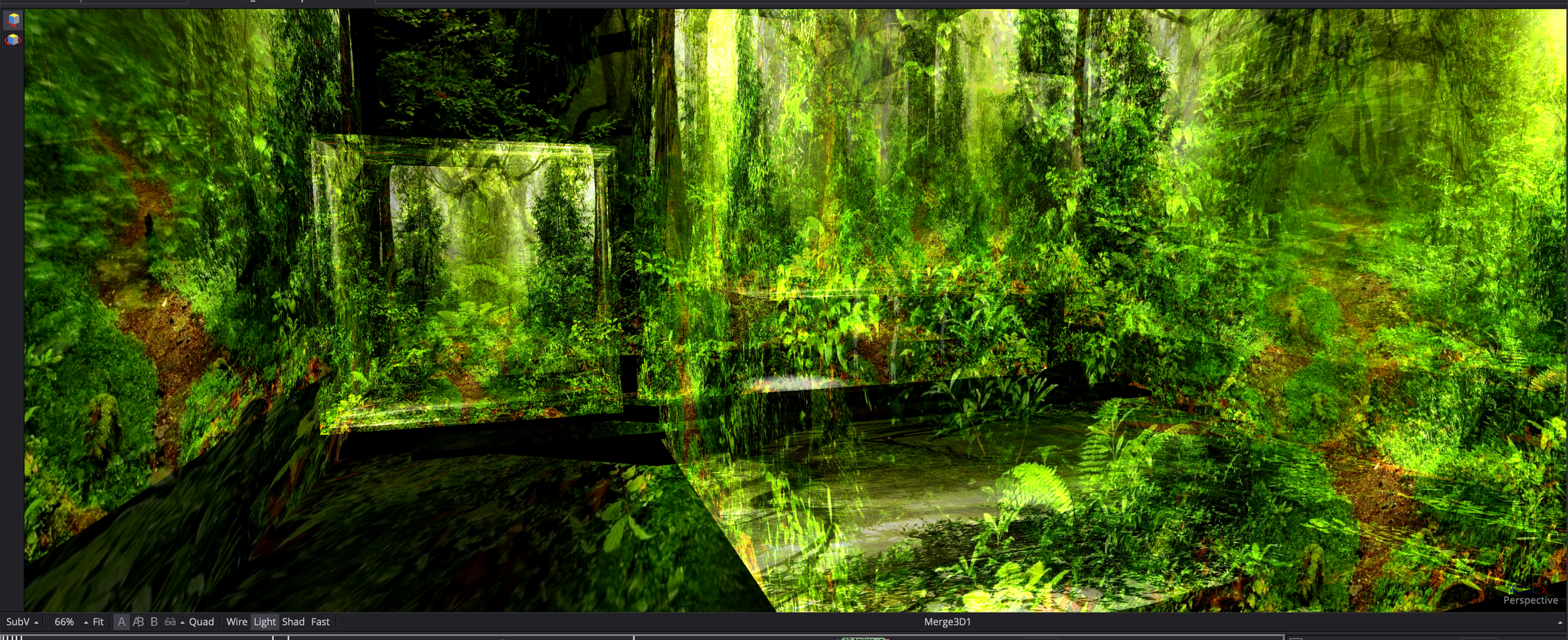

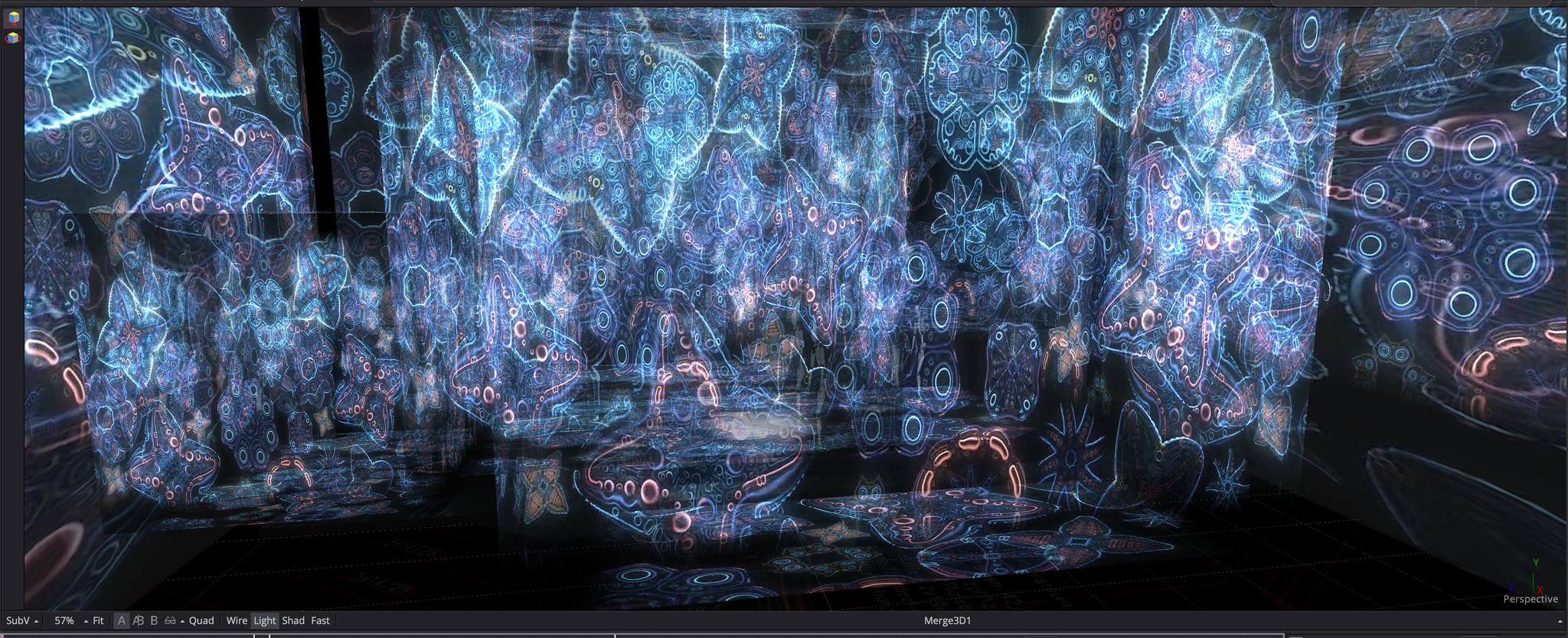

Interactive, immersive, motion-controlled VR / 3D video dance performance

This project is funded by FFF Bayern.

FFF Bayern - award decision XR of June 25, 2020

Award decision Extended Realities (XR) of June 25, 2020: Project development XR

Mensch Maschine Cyborg Dancing Applicant: Angelika Maria Meindl Mahnecke,

MunichPlatforms: VR experience for Oculus Rift 3D video

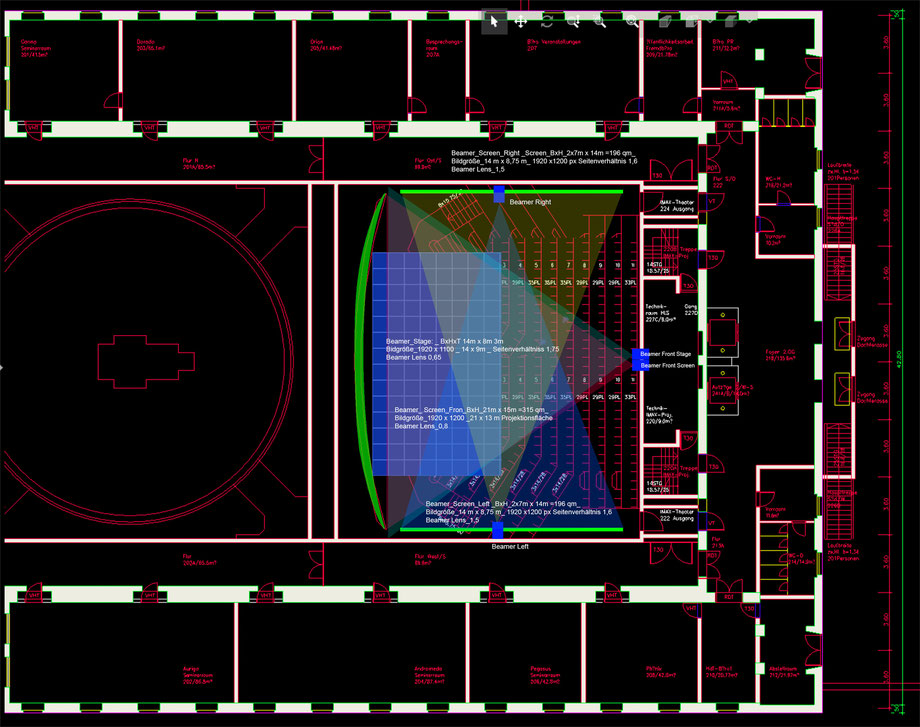

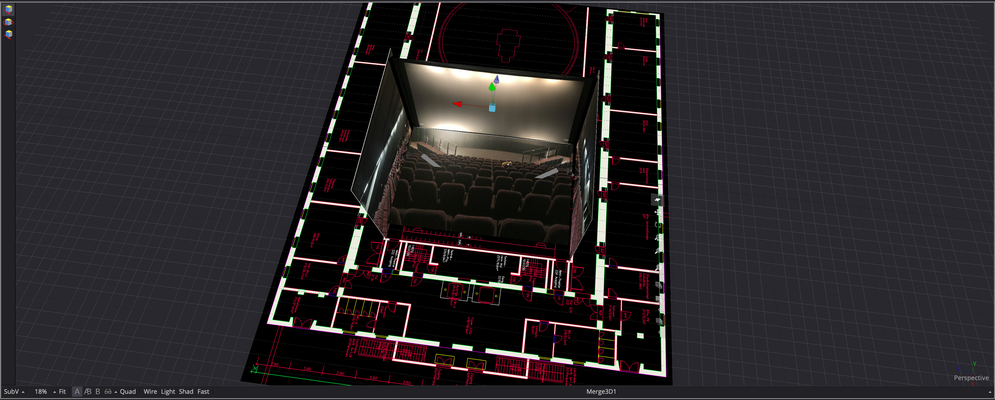

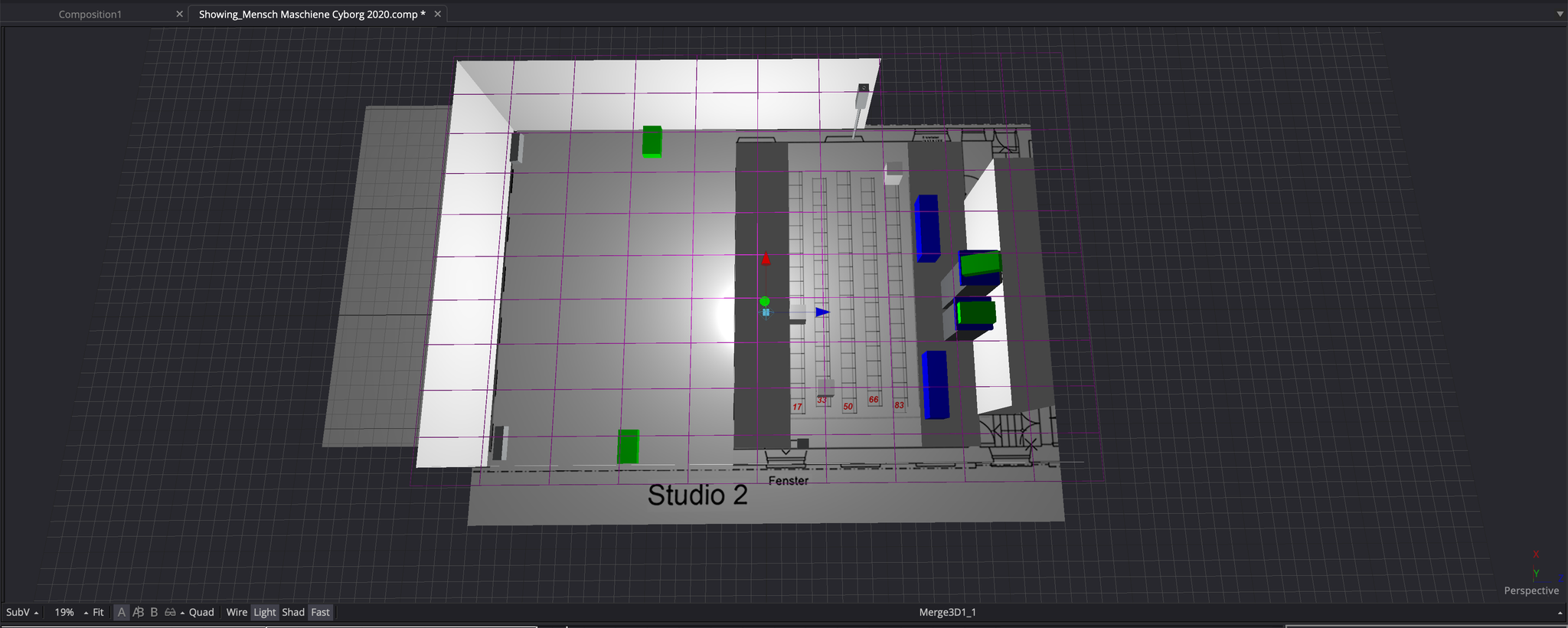

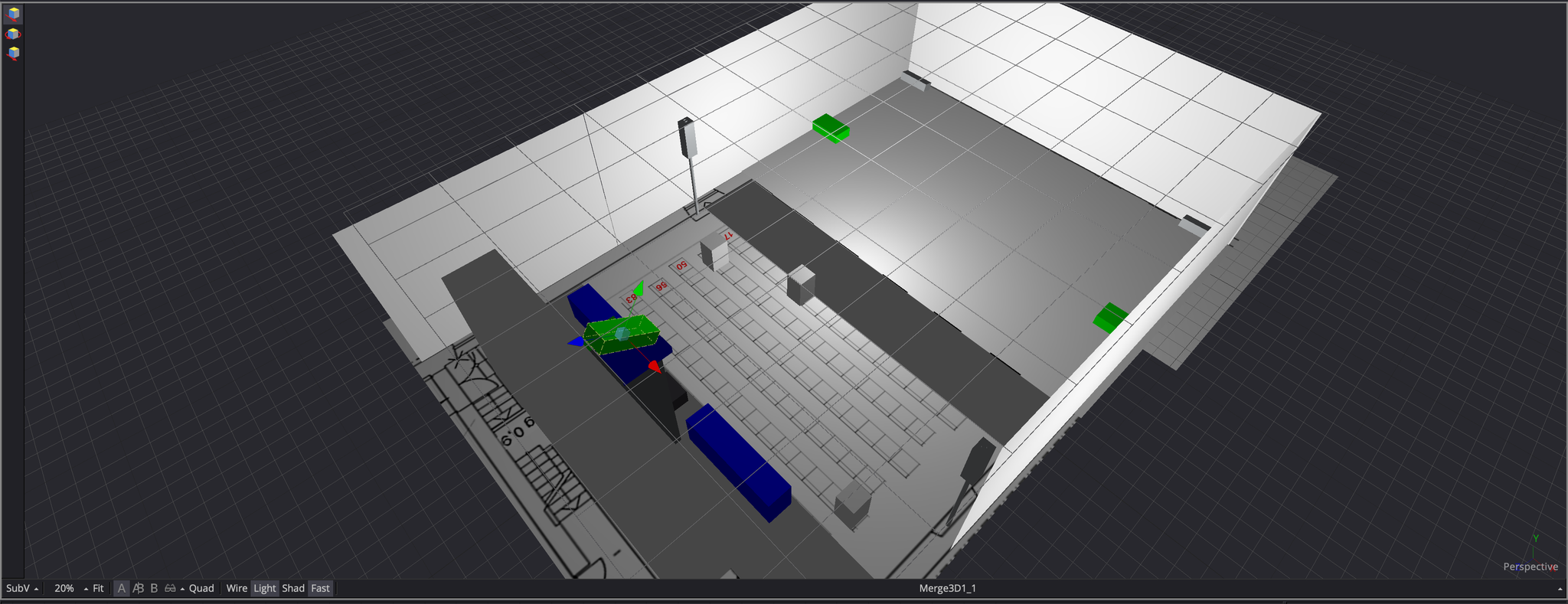

dance performance should be made possible. In terms of content, the performance deals with the conflict between the technology-driven pursuit of perfection and efficiency as well as the longing of the individual to find and experience himself. Using 3D projectors and a three-dimensional sound field, the dispositive of the stage and auditorium is to be lifted and a constantly changing space is created. Visual and auditory elements should interact with the movements of the dancers via sensors.

Key staff / partnerships

We looked for partners and formed partnerships to develop this project together.

Key staff

- Art Director: Angelika Maria Meindl Mahnecke

- Visual Design: Tobias Gremmler

- Technical Director: Thomas Mahnecke

- Production Management: Claudia Mittermaier

- Sound Design: Fred Lutz

Partnerships

Purpose of the project:

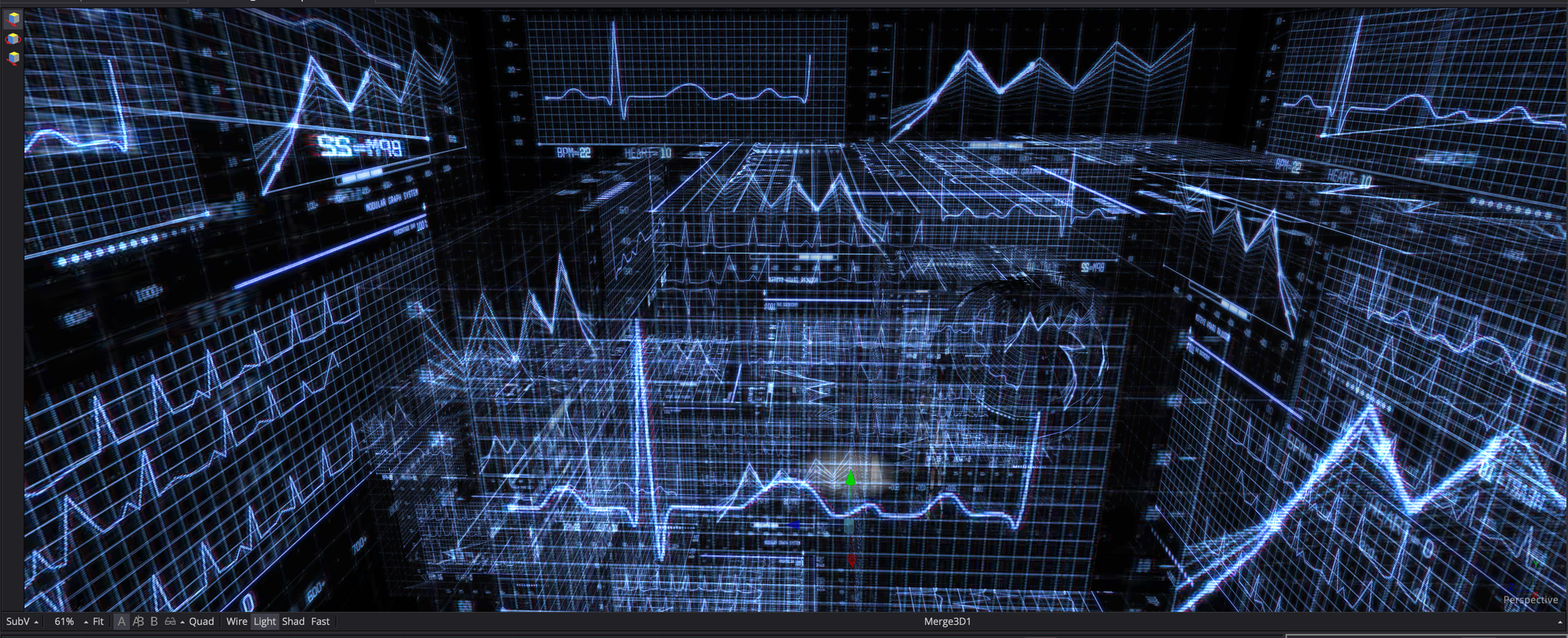

Each of our current decisions influences our future and is in constant motion and change. Nothing stands still; our thoughts and actions carry us along in a stream of events, imaginations and visualizations that manifest and change again, a constant flow. This meta-level that surrounds us, in which we act and daily visualize our own chain of events, consciously and unconsciously, is what we convey through the installation and performance.

It surrounds the actors and the audience alike.

Our aim is to move away from performances in which the viewer looks at the events on stage from the outside, and toward a shared immersion in visionary images in an interactive "real-virtual world" that surrounds the viewer.

This is possible thanks to interactive and immersive 3D projections that surround the audience within a three-dimensional sound field. A constantly changing space that offers no fixed spatial reference, but leaves the structure of space and time in suspension, itself becomes a seemingly amorphous being that connects with the movements and bodies of the dancers.

Vision becomes reality!

Let us imagine that the visual boundaries between live performance on stage, projections and audience dissolve. The space, the stage design and the performers merge into a single, visually perceptible, three-dimensional “hyperworld”. These hybrid, virtual worlds hold new, unimagined possibilities for theater and show development. Novel digital and interactive multi-user exhibition scenarios for museums, as well as worlds of experience, can also be developed and implemented more easily in live events.

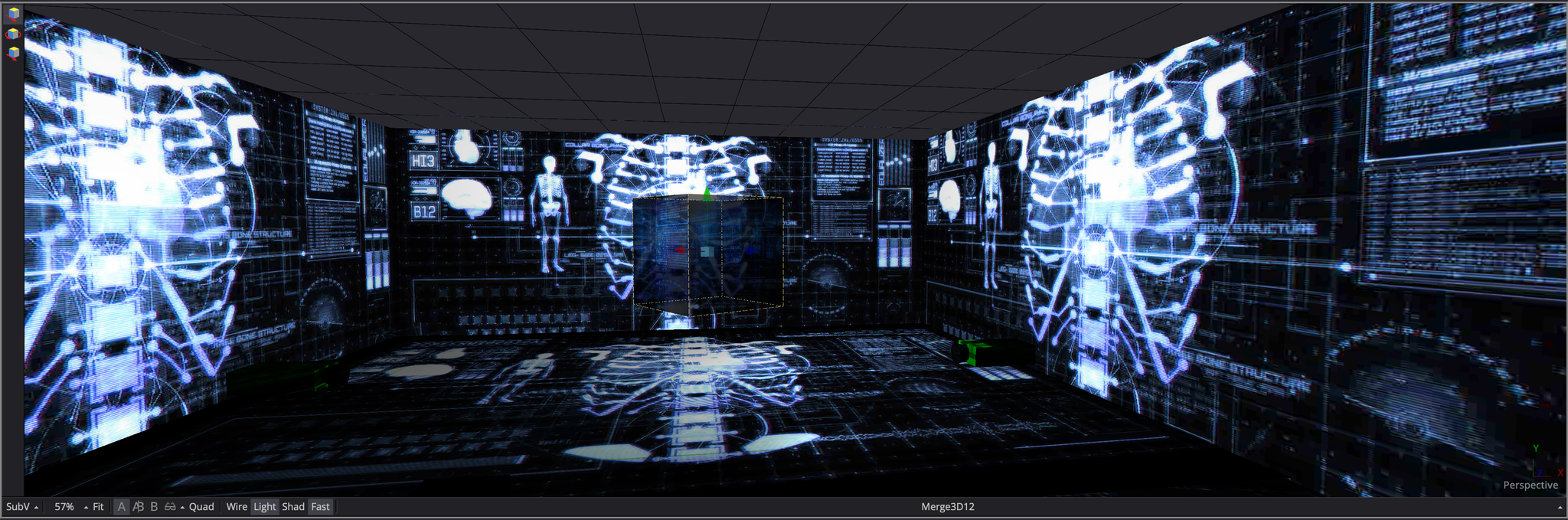

The aim of our "prototype" development is to lay the groundwork for a new kind of real / virtual stage world: a real-time representation of the digitally projected 3D stage set that interacts simultaneously with lighting, sound and stage control systems, and a genuine multi-user interaction between the actors on the projection stage and the audience. For directors, choreographers and the audience alike, almost endless dimensions open up that challenge our perception and inspire the imagination. Modern motion tracking systems linked to digital avatars in real time make it possible, for example in contemporary dance, to choreograph dimensions and movements limited only by our imagination. Not only are dancers and “avatars” choreographed together; spaces, projections and actors also blend until they can hardly be told apart. Together with them, we dive into the three-dimensional “hyperspace”, in which everything is possible and space and time have dissolved.

Why was the technology chosen?

Stage designs projected three-dimensionally through 3D projectors or LED walls are currently rarely offered to the public in theater shows, entertainment shows or museums. Outside of VR, only 3D cinema films regularly let viewers experience such three-dimensional worlds. This is due on the one hand to the technical complexity and on the other to the extensive production effort: many different hardware and software systems have to be combined. In particular, adapting and changing a fully rendered 3D scene for the digitally projected stage design takes a lot of time and is therefore costly. Integrating interactive elements, such as the live use of motion capture or other tracking systems and interfaces for developing interactive scenes, is technically demanding. Museums, too, increasingly ask for real multi-user experiences in which several visitors can interact without contact and perceive a three-dimensional world without VR glasses.

To the best of our knowledge, there is no system that lets us simply use certified hardware within a single software environment to create content, change it in real time, communicate with different interfaces, interact, and immediately display the result on the "stage" in real time using "nDisplay" technology.

IMAX Deutsches Museum

Test Showroom:

Video:

Angelika Maria Meindl Mahnecke / creative director, choreography

Thomas Mahnecke / visualizations, technical management

Premiere of Man Machine Part 3 in the "Kraftraum" of the Deutsches Museum in October 2018